Plagiarism isn’t just a problem for newspapers. It has plagued the internet for a long time.

You may create high-quality, original content. However, there’s always some other website out there waiting to steal it and pass it off as its own.

While this is frustrating in itself, the problem gets worse when the website with the stolen content starts ranking higher than yours in search results.

These sites not only steal your audience but also monetize the scraped content, taking your revenue too.

But, what exactly is scraped content? And how can you deal with it? Let’s find out!


What is Scraped Content?

Content scraping is the practice of pulling content from websites using scripts, and the copied material is called scraped content. These scripts, or web crawlers, extract content from different sources and assemble it on a single website.

People steal content for a variety of reasons. Some want to make money through affiliate marketing. Others want to generate leads or increase ad revenue by ranking on search engines.

It is, however, important to understand the difference between syndication and scraping. Content scraping is when another website automatically copies your content without your permission.

On the other hand, syndication is when both parties agree to use the content under an agreement.

You put a lot of effort into creating high-quality content. It didn’t come for free, and copyright law gives you the right to protect it.

Before we get into how you can deal with content scrapers, let’s see how you can catch them first.

How to Find Content Scrapers

Finding content scrapers may seem like a tough and time-consuming task. However, there are a few ways to spot them:

1. Perform a Google Search

This is the simplest way to find sites that have scraped your content. All you need to do is search Google for the titles of your posts.

While it may sound like a crude method, there’s a good chance you’ll come across a few.
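If you have many posts to check, a short script can build the search URLs for you. The sketch below is a minimal example, assuming you want an exact-phrase match on each title (the quotation marks) and want to hide results from your own site with Google’s -site: operator; example.com stands in for your domain.

```python
from urllib.parse import urlencode


def exact_title_search_url(title, own_domain):
    """Build a Google search URL for an exact-match title, excluding your own site."""
    # Quotation marks request an exact-phrase match; -site: hides your own pages.
    query = f'"{title}" -site:{own_domain}'
    return "https://www.google.com/search?" + urlencode({"q": query})


print(exact_title_search_url("What Is Scraped Content?", "example.com"))
```

Open each URL in a browser and look for pages that reuse your title word for word.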

2. Trackbacks

If your posts contain internal links, you may get a trackback every time a website scrapes your content and keeps those links pointing back to you. This way, you can spot the scraper without much effort.

However, if you’ve enabled Akismet, many of these trackbacks may end up in your spam folder, so skim through it as well. Remember, this method only works if your posts contain internal links.

3. Google Webmaster Tools

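Google Webmaster Tools (now called Google Search Console), one of the best free SEO audit tools, can help you find scrapers too. Under the “Traffic” section, you will see an option called “Links to Your Site.” In the current Search Console, the equivalent is the “Links” report.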

There’s a good chance some scrapers appear in this list, since a scraped copy of your site may contain loads of links pointing to your pages.
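If you export this list (Search Console lets you download its link reports), a few lines of Python can surface unfamiliar sites with unusually many links to you. This is a rough sketch: the column names Site and Linking pages, the threshold of 50, and the known_sites allowlist are all assumptions, so adjust them to match your actual export.

```python
import csv
import io


def suspicious_linking_sites(csv_text, min_links=50, known_sites=frozenset()):
    """Return (site, link_count) pairs for unfamiliar sites with many links to you."""
    flagged = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        site = row["Site"].strip().lower()
        # Exports may format large numbers with thousands separators, e.g. "1,480".
        count = int(row["Linking pages"].replace(",", ""))
        if count >= min_links and site not in known_sites:
            flagged.append((site, count))
    # Sites with the most links first: bulk copies tend to carry many of your links.
    return sorted(flagged, key=lambda pair: pair[1], reverse=True)


sample = """Site,Linking pages
partner-blog.com,12
copycat-news.net,"1,480"
example-forum.org,75
"""
print(suspicious_linking_sites(sample, known_sites={"example-forum.org"}))
```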

How to Deal With Content Scraping

Now that you know how to find content scrapers, let’s see how you can deal with scraped content.

1. Adding Links

Create as many internal links within your website as you can. These links point readers to older articles that are relevant to the one they are currently viewing.

Interlinking helps your readers find new articles with ease and also makes it easier for search engine crawlers to discover your pages.

It also helps when dealing with content scraping. When someone steals your content, they may keep these links intact, which gives you free backlinks from their website.

Linking keywords that tempt readers to click can also reduce your bounce rate.

At the same time, when the article is scraped, visitors to the scraper’s website may click on those links too. This way, you end up stealing the scraper’s audience.

Another way to secure these links is the Yoast SEO plugin. It lets you add custom HTML to your RSS feed, so every post in the feed can include links back to your website.
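To illustrate what such a feed footer does, here is a small Python sketch that appends an attribution line to every item in an RSS 2.0 feed. It is not how Yoast works internally; it only mirrors the idea behind its RSS settings, whose default footer reads along the lines of “The post ... appeared first on ...”. The function name and site details are hypothetical.

```python
import xml.etree.ElementTree as ET
from html import escape


def add_feed_attribution(rss_xml, site_name, site_url):
    """Append an 'appeared first on' line to the description of every RSS item."""
    root = ET.fromstring(rss_xml)
    for item in root.iter("item"):
        link = item.findtext("link", default=site_url)
        title = item.findtext("title", default=link)
        description = item.find("description")
        if description is None:
            description = ET.SubElement(item, "description")
        footer = (
            f'<p>The post <a href="{escape(link)}">{escape(title)}</a> appeared first on '
            f'<a href="{escape(site_url)}">{escape(site_name)}</a>.</p>'
        )
        # ElementTree escapes this markup on output, the usual way HTML travels in RSS.
        description.text = (description.text or "") + footer
    return ET.tostring(root, encoding="unicode")
```

Because the footer sits inside each item, a scraper that republishes your feed verbatim also republishes the links back to you.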

2. The Do Nothing Approach

This is the simplest approach, and it’s self-explanatory. Fighting content scraping can be time-consuming, and the time and energy you spend could go into creating more quality content.

However, this may not be the best approach for everyone. If yours is a high-authority website, you can concentrate on your work without paying heed to scraped content.

But if your website isn’t well-ranked on search engines, ignoring scrapers is riskier. Google AdSense may end up flagging your website as the scraper if it concludes that the scrapers’ copies are the originals.

3. Ping PubSubHubbub

Google may find the scraped version of your content before it finds your post. When that happens, it can’t be sure which post is the original and which one infringes your copyright.

If you ping PubSubHubbub (now standardized as WebSub), you inform Google that you are the original source of the content and published it first. This helps prevent your content from being labelled as copyright infringement.

If you use a platform such as WordPress.com or Blogger, you may not need to do this. However, if you’re using self-hosted WordPress, you can choose to install the PubSubHubbub plugin to simplify the process.
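Under the hood, a publish ping is just an HTTP POST to the hub. The sketch below builds such a request with Python’s standard library, assuming the hub accepts the classic hub.mode=publish and hub.url parameters, as Google’s public hub at pubsubhubbub.appspot.com does. The feed URL is a placeholder, and the plugin mentioned above handles all of this for you.

```python
import urllib.parse
import urllib.request

# Google's public hub (assumption: your feed advertises this hub).
GOOGLE_HUB = "https://pubsubhubbub.appspot.com/"


def build_publish_ping(feed_url, hub_url=GOOGLE_HUB):
    """Build a PubSubHubbub/WebSub publish notification telling the hub your feed changed."""
    body = urllib.parse.urlencode({"hub.mode": "publish", "hub.url": feed_url}).encode()
    return urllib.request.Request(
        hub_url,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


# To send it right after publishing a post:
# urllib.request.urlopen(build_publish_ping("https://example.com/feed/"))
```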

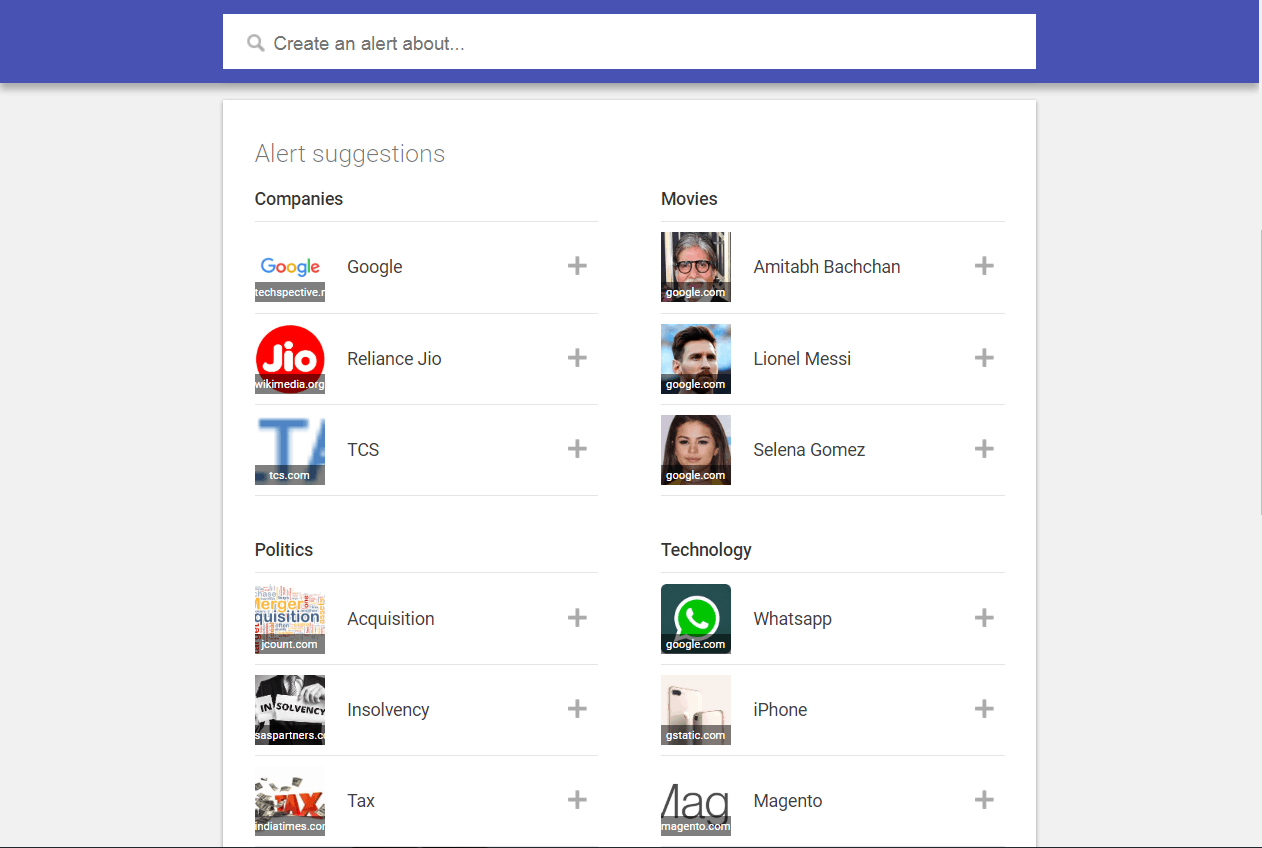

4. Use Google Alerts

Google Alerts, one of the best free Google tools, notifies you whenever a certain keyword gets indexed on Google. While it’s most often used to track brand mentions, you can also use it to find content scrapers.

Every time you publish a post, set up a Google Alert for its exact title, in quotation marks. Whenever a scraper publishes a post with the same title, you’ll be notified.

You can also pick out a few unique sentences from your post and set up alerts for them.
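Choosing which sentences to track can be partly automated. The heuristic below, which simply assumes your longest sentences are the most likely to be unique, returns exact-match queries (in quotation marks) that you can paste into Google Alerts. The function name and the default of two sentences are illustrative.

```python
import re


def alert_queries(title, body, max_sentences=2):
    """Suggest exact-match Google Alerts queries: the title plus the longest sentences."""
    # Split after sentence-ending punctuation followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", body) if s.strip()]
    # Heuristic (assumption): longer sentences are more likely to be unique to you.
    longest = sorted(sentences, key=len, reverse=True)[:max_sentences]
    return [f'"{title}"'] + [f'"{s.rstrip(".!?")}"' for s in longest]


print(alert_queries(
    "My Post",
    "Short one. This sentence is noticeably longer than the others here. Mid-sized line here!",
))
```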

Image via Google Alerts

5. The “Kill Them All” Approach

You may decide to play it cool if you find that the scrapers are giving you free backlinks.

However, other scrapers strip your content of all its hyperlinks, and all your interlinking work is lost.

When this happens, you can use the “kill them all” approach. Check your server’s access logs to find the scraper’s IP address, then block that address in the .htaccess file in your site’s root directory.

Once you do this, they can no longer scrape your content from that particular IP address.
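Spotting the IP address usually means counting requests per client in your access log. The sketch below assumes Apache-style log lines that start with the client IP (the Common and Combined Log Formats both do) and prints rules in Apache 2.4 Require syntax, which works in .htaccess only if your host allows these overrides. A high request count alone doesn’t prove scraping, since search engine crawlers also make many requests, so check each address before blocking it.

```python
import re
from collections import Counter

# Common and Combined Log Format lines begin with the client IP address.
CLIENT_IP = re.compile(r"^(\S+) ")


def top_requesters(log_lines, limit=5):
    """Count requests per client IP and return the busiest ones."""
    counts = Counter()
    for line in log_lines:
        match = CLIENT_IP.match(line)
        if match:
            counts[match.group(1)] += 1
    return counts.most_common(limit)


def htaccess_block_rules(ips):
    """Apache 2.4 rules that allow everyone except the listed addresses."""
    rules = ["<RequireAll>", "    Require all granted"]
    rules += [f"    Require not ip {ip}" for ip in ips]
    rules.append("</RequireAll>")
    return "\n".join(rules)


log = [
    '203.0.113.7 - - [10/Oct/2023:13:55:36 +0000] "GET /feed/ HTTP/1.1" 200 5120',
    '203.0.113.7 - - [10/Oct/2023:13:55:37 +0000] "GET /post-1/ HTTP/1.1" 200 8342',
    '198.51.100.2 - - [10/Oct/2023:13:56:02 +0000] "GET /post-1/ HTTP/1.1" 200 8342',
    '203.0.113.7 - - [10/Oct/2023:13:55:38 +0000] "GET /post-2/ HTTP/1.1" 200 7781',
]
print(top_requesters(log))
print(htaccess_block_rules(["203.0.113.7"]))
```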

Another way of doing it is by directly contacting the scraper and asking them to take the content down. You could even ask them to give you attribution as the original source of the content.

If they don’t comply, you can file a Digital Millennium Copyright Act (DMCA) takedown notice with their hosting provider.

Another option is to redirect them to a dummy feed. This feed can serve them huge amounts of gibberish text, or it can point them back to their own server.

In the latter case, the scraper may end up in a loop that could crash their website.
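The dummy-feed idea can be sketched as a small piece of WSGI middleware in Python. It covers only the gibberish-feed variant: requests for the feed path from addresses you have already identified get a placeholder feed, while everyone else gets the real one. The /feed/ path, the SCRAPER_IPS set, and the placeholder content are assumptions.

```python
# Hypothetical set of scraper IPs you have identified from your logs.
SCRAPER_IPS = {"203.0.113.7"}

DUMMY_FEED = (
    b'<?xml version="1.0"?><rss version="2.0"><channel>'
    b"<title>Nothing to see here</title></channel></rss>"
)


def feed_gate(real_feed_app):
    """WSGI middleware: known scraper IPs get a dummy feed instead of the real one."""
    def app(environ, start_response):
        if environ.get("REMOTE_ADDR") in SCRAPER_IPS and environ.get("PATH_INFO") == "/feed/":
            start_response("200 OK", [("Content-Type", "application/rss+xml")])
            return [DUMMY_FEED]
        return real_feed_app(environ, start_response)
    return app


# e.g. wsgiref.simple_server.make_server("", 8000, feed_gate(your_real_app)).serve_forever()
```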

6. Stop Hotlinking Images

If people are scraping your RSS feed, they may be stealing your bandwidth too, by hotlinking images: displaying your images on their site while the files are still served from your server.

To stop this, you can edit your website’s .htaccess file to disable image hotlinking.
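On Apache, hotlink protection is typically written as mod_rewrite rules in .htaccess that check the Referer header of image requests. The Python function below sketches the same decision logic so it is easy to read and test; ALLOWED_HOSTS is a placeholder for your own domains. Requests with no Referer are allowed, because many browsers and privacy tools omit it and blocking them would break images for real visitors.

```python
from urllib.parse import urlparse

# Placeholder: replace with the hostnames your own pages are served from.
ALLOWED_HOSTS = {"example.com", "www.example.com"}


def allow_image_request(referer):
    """Allow image requests with no Referer or a Referer from your own site."""
    if not referer:
        # No Referer header: could be a direct visit or a privacy setting, so allow it.
        return True
    host = (urlparse(referer).hostname or "").lower()
    return host in ALLOWED_HOSTS
```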

7. Link Keywords With Affiliate Links

Content scraping may cost you some valuable traffic. However, you can turn that to your advantage by adding affiliate links to certain keywords.

You can automate this process by using plugins such as SEO Smart Links and Ninja Affiliate.

This way, even if you lose some traffic, you still earn affiliate income from it. You may even profit from the scraper’s audience.
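Plugins like these do the linking automatically, but the core idea is simple: find the first mention of each keyword and wrap it in a link. The sketch below is a hypothetical Python version that assumes plain-text input (not HTML) and placeholder affiliate URLs. It scans the text in a single pass so that URLs it has already inserted are never matched again, and it marks links with rel="sponsored", the value Google asks publishers to use for paid links.

```python
import re
from html import escape


def link_keywords(text, links):
    """Wrap the first mention of each keyword in a link to its affiliate URL."""
    if not links:
        return text
    lookup = {keyword.lower(): url for keyword, url in links.items()}
    # Longest keywords first, so "web hosting" wins over "hosting" when both apply.
    alternatives = sorted(lookup, key=len, reverse=True)
    pattern = re.compile(
        r"\b(" + "|".join(map(re.escape, alternatives)) + r")\b", re.IGNORECASE
    )
    already_linked = set()

    def replace(match):
        word = match.group(1)
        key = word.lower()
        if key in already_linked:
            return word  # Only the first occurrence of each keyword gets a link.
        already_linked.add(key)
        return f'<a href="{escape(lookup[key])}" rel="sponsored">{word}</a>'

    # One pass over the original text, so inserted URLs are never re-scanned.
    return pattern.sub(replace, text)
```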

8. Summary RSS Feed

When you have an RSS feed, you can choose between a full feed and a summary. One advantage of a summary feed is that it helps prevent content scraping.

To set this up in WordPress, go to Settings and click “Reading.” In the field “For each article in a feed, show,” change the setting to “Summary.” (Newer WordPress versions label this field “For each post in a feed, include” and the option “Excerpt.”)

This way, your feed shows only summaries, and scrapers can’t copy complete posts from it.
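WordPress builds these summaries for you, but the idea is easy to see in code. The sketch below strips HTML tags, keeps the first words of a post, and appends a link to the full article. The 55-word default mirrors WordPress’s default excerpt length, and the function name is hypothetical.

```python
import re
from html import unescape


def feed_summary(post_html, permalink, max_words=55):
    """Strip tags, keep the first max_words words, and link to the full post."""
    # Replace tags with spaces so words on either side of a tag stay separate.
    text = unescape(re.sub(r"<[^>]+>", " ", post_html))
    words = text.split()
    summary = " ".join(words[:max_words])
    if len(words) > max_words:
        summary += " [...]"
    return f"{summary} Read the full post: {permalink}"
```

A scraper pulling this feed only gets the opening lines plus a link back to your site.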

9. Limit Individual IP Addresses

If you’re receiving loads of requests from a single computer, it may be a content scraper. One way to prevent scraping is to block computers that request pages from your website too quickly.

However, keep in mind that some proxy services route many users’ traffic through a single IP address. The same applies to VPNs and corporate networks.

So you may end up blocking many legitimate users in the process.

Additionally, scrapers with better resources can circumvent this protection. They may spread their scraping across multiple machines.

This way, only a few requests reach your website from any single machine, which makes them extremely difficult to block.

They may also slow down their scraper so that it waits between requests, disguising itself as a normal user.
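Blocking clients that request pages too fast is usually done with a rate limiter. Below is a minimal sliding-window version in Python, assuming you key it by IP address and pick your own limit and window. Given the caveats above about shared IPs, you may prefer to use it to flag or slow down clients rather than ban them outright. The injectable clock exists only to make the class easy to test.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests per IP within any `window`-second span."""

    def __init__(self, limit=60, window=60.0, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock
        self.hits = defaultdict(deque)  # IP -> timestamps of recent allowed requests

    def allow(self, ip):
        now = self.clock()
        recent = self.hits[ip]
        # Drop timestamps that have slid out of the window.
        while recent and now - recent[0] >= self.window:
            recent.popleft()
        if len(recent) >= self.limit:
            return False  # Too many requests in the window: reject (or challenge).
        recent.append(now)
        return True
```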

10. Change HTML Frequently

Content scrapers mainly rely on patterns in a website’s HTML, such as particular class names or element structures. Their scripts use these patterns to find the right content in your pages.

However, if your website’s HTML changes frequently, you may frustrate the scraper.

This is because they have to keep finding new patterns and updating their scripts. Eventually, they may stop scraping your website.

While this may sound tedious, you don’t need to change the website completely. Changing something as simple as a class or id in the HTML can be enough to throw the scraper off track.
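One low-effort way to change class names regularly is to generate them at deploy time. The sketch below assumes your templates and stylesheets refer to stable logical names (post-body and post-title, both hypothetical) that a build step replaces with salted hashes. Applying the same map to both HTML and CSS keeps your own styling intact, while the names a scraper depends on change with every new salt.

```python
import hashlib


def build_class_map(logical_names, build_salt):
    """Map stable class names used in your templates to per-build names."""
    mapping = {}
    for name in logical_names:
        digest = hashlib.sha256(f"{build_salt}:{name}".encode()).hexdigest()[:8]
        # Prefix with a letter so the class name never starts with a digit.
        mapping[name] = f"c{digest}"
    return mapping


print(build_class_map(["post-body", "post-title"], "2024-06-01"))
```

Changing the salt (for example, on each deploy) produces a fresh set of names.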

11. Create a Login For Access

HTTP by itself is stateless: it doesn’t preserve any information from one request to the next, although most HTTP clients, such as browsers, store session cookies. As a result, a scraper accessing a public page doesn’t need to identify itself.

However, things change when visitors need to log in to access a page. The scraper then has to send identifying information, such as a session cookie, with every single request to view the content.

This information can help you trace the requests back and find out who is scraping your website.

While it doesn’t stop the content scraping, it can definitely help you identify those who are doing it.
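Once pages sit behind a login, every request carries a session cookie that maps to an account, so counting page views per account shows who is pulling the most content. The Python class below is a minimal sketch of that bookkeeping; the in-memory sessions dictionary stands in for whatever session store your site actually uses.

```python
from collections import Counter


class SessionAudit:
    """Count page views per logged-in account so heavy readers can be reviewed."""

    def __init__(self, sessions):
        # sessions: session-cookie value -> account name (hypothetical store).
        self.sessions = sessions
        self.views = Counter()

    def record(self, session_cookie):
        """Record one page view; return False if the cookie matches no login."""
        account = self.sessions.get(session_cookie)
        if account is None:
            return False  # Not logged in: the page should ask for a login instead.
        self.views[account] += 1
        return True

    def heaviest(self, n=3):
        """Accounts with the most page views, busiest first."""
        return self.views.most_common(n)
```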

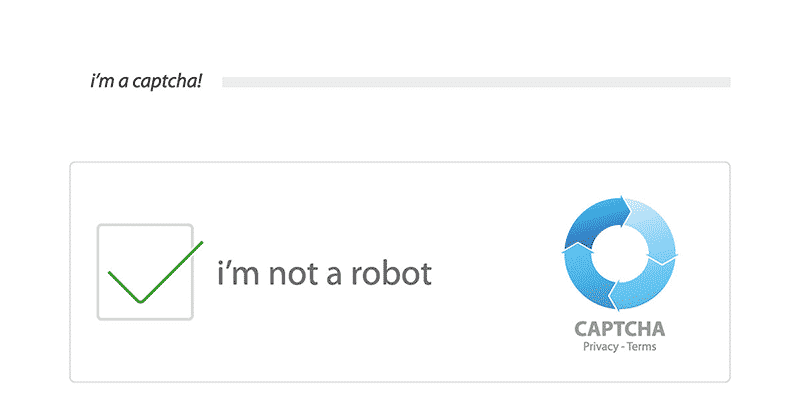

12. Use CAPTCHAs

The purpose of CAPTCHAs is to separate humans from computers. They present simple problems that humans can solve easily but computers find hard.

While this helps you filter out bots, it can also cost you valuable traffic.

The reason is simple: humans find CAPTCHAs extremely annoying. This is why you need to be careful when using them.

For example, you could show a CAPTCHA only when a particular client has sent many requests in a short period.
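A token bucket is one common way to decide when a client has sent too many requests in a short period. In this Python sketch, each client starts with a full bucket of tokens, every request spends one, and tokens refill slowly over time; a CAPTCHA is shown only once the bucket runs dry. The capacity and refill rate are illustrative values, not recommendations.

```python
import time


class CaptchaTrigger:
    """Token bucket per client: show a CAPTCHA only after a burst empties the bucket."""

    def __init__(self, capacity=20, refill_per_sec=0.5, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.clock = clock
        self.buckets = {}  # client id -> (tokens, timestamp of last request)

    def needs_captcha(self, client_id):
        now = self.clock()
        tokens, last = self.buckets.get(client_id, (self.capacity, now))
        # Refill tokens for the time elapsed since this client's last request.
        tokens = min(self.capacity, tokens + (now - last) * self.refill_per_sec)
        if tokens >= 1:
            self.buckets[client_id] = (tokens - 1, now)
            return False
        self.buckets[client_id] = (tokens, now)
        return True
```

Ordinary readers rarely empty the bucket, so most of them never see a CAPTCHA at all.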

Image via Dropified

13. Make New “Honey Pot” Pages

Another way of dealing with content scraping is to create honey pot pages. These are pages that humans will never visit, but bots that follow every link on your website may end up there.

To keep humans from opening such a page, hide the link to it with the CSS declaration display: none.

Once a bot follows the hidden link and reaches the honey pot page, you can record its information, such as its IP address. Then, you can block all requests coming from that client.
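A honey pot can be sketched in a few lines of Python. The trap path and link text below are hypothetical. Listing the trap in robots.txt is a design choice worth considering: well-behaved crawlers such as Googlebot honor it and stay out, while scrapers that ignore robots.txt and follow every link are the ones that get caught. The in-memory blocked_ips set is for illustration only; a real site would persist it.

```python
# Hypothetical trap URL, linked invisibly from your pages and disallowed in robots.txt.
TRAP_PATH = "/do-not-follow/"
ROBOTS_TXT = f"User-agent: *\nDisallow: {TRAP_PATH}\n"
HIDDEN_LINK = f'<a href="{TRAP_PATH}" style="display: none" rel="nofollow">archive</a>'

blocked_ips = set()


def handle_request(ip, path):
    """Return an HTTP status code; any client that hits the trap is blocked from then on."""
    if ip in blocked_ips:
        return 403
    if path == TRAP_PATH:
        blocked_ips.add(ip)  # Record the client so every later request is refused.
        return 403
    return 200
```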

14. Embed Information Inside Media

Content scrapers generally assume that they will be fetching a text string from an HTML file.

However, if your content is embedded inside an image, video, PDF, or other media file, the scraper has to extract it from there, which is a much bigger task.

While this helps prevent or reduce content scraping, it can also slow your site down, because media files are larger than text. It can also make your content less accessible to people who are blind or have other disabilities.

Lastly, content embedded inside media files is much harder to update.

Final Thoughts

Content scraping can be a pain to deal with and can even take valuable traffic away from your website, which can hinder your content marketing efforts.

However, by finding the scrapers’ IP addresses, you can block them from accessing your website. And interlinking can earn you some backlinks from the scrapers’ websites.

You can also file a DMCA takedown notice with their host once you’ve found their IP address. Using CAPTCHAs can also help keep bots out of your website.

Lastly, by cleverly inserting affiliate links into the text, you can make money from the scraper’s traffic too.

What methods do you use to deal with content scraping? Let us know in the comments below.

